Apple and Samsung, two dominant players in the consumer electronics market, have revived a critical flaw in Extended Reality (XR) interface design that Meta addressed more than five years ago. Both companies unveiled gaze-based selection systems in their latest spatial computing devices on the assumption that gaze would streamline interaction. However, this interaction model, which Meta deprecated during Oculus Quest development, has resurfaced with the same usability limitations: eye fatigue, selection inaccuracy, and cognitive load.

Meta, through its Reality Labs division, had already documented the ergonomic failures of using gaze as the primary input in immersive environments. Even so, Apple’s Vision Pro and Samsung’s XR device rely on gaze anchoring combined with pinch gestures, prioritizing visual minimalism over interaction fidelity. The return of this outdated design paradigm points to a disconnect between design aesthetics and functional human-computer interaction in the XR ecosystem.

The following sections examine why Meta abandoned gaze-based control, how Apple and Samsung repeated the same interaction mistakes, and what the future of spatial UI must include to align with natural user behavior and semantic input consistency.

Why Did Apple and Samsung Repeat a Known XR Design Flaw?

Apple and Samsung both implemented gaze-based input in their latest XR devices, Apple Vision Pro and Samsung’s upcoming XR headset, even though Meta had deprecated that model after it caused major user experience issues in Oculus Quest interfaces. Both companies rely on visual gaze anchoring combined with a finger pinch for selection, an approach Meta abandoned in 2018 because of eye fatigue, imprecise focus tracking, and high cognitive friction.

User testing by Meta’s Reality Labs found that gaze-based selection added latency to action execution. Meta’s interface design moved toward hand tracking and controller-based raycasting with haptic feedback, which proved more intuitive and less mentally taxing. Apple and Samsung set these findings aside in favor of “cleaner” UI designs that ultimately reduce interaction speed and precision in dynamic 3D environments.

How Did Meta Solve XR Interaction Latency and Precision Problems?

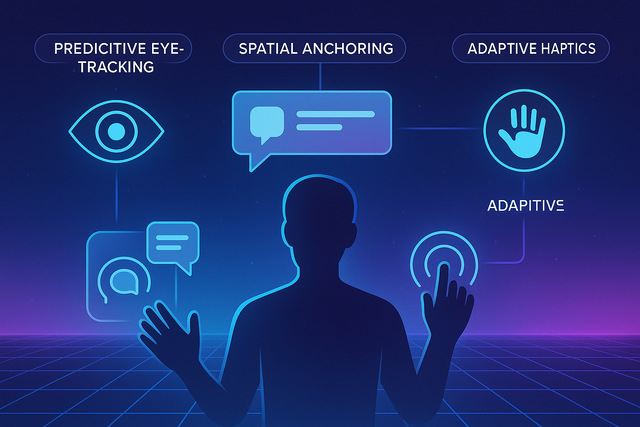

Meta introduced spatial anchors, hand-tracking input models, and direct selection via pose-predicted raycasting. These systems avoided the problems of eye-based targeting by aligning interaction more closely with natural human ergonomics.

Spatial anchors kept UI elements fixed to locations in the physical environment, so interfaces stayed put as the user moved, reducing disorientation. Pose-predicted raycasting from controllers or hands allowed more precise and repeatable targeting, especially when multitasking. Meta also integrated haptic feedback loops that confirmed each action and improved usability over extended sessions. Together, these approaches addressed the fatigue, inaccuracy, and inconsistent gaze tracking that still affect the latest Apple and Samsung systems.
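The article does not describe how Meta implements pose-predicted raycasting, but the core idea can be shown in a few lines. The sketch below assumes a flat rectangular UI panel and a simple linear prediction of the pointer position (both assumptions for illustration, not Meta's method); selection then reduces to a ray-plane intersection followed by a bounds check. The `Pose`, `Panel`, `predict_pose`, and `raycast_panel` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

@dataclass
class Pose:
    origin: Vec3      # controller or hand position, in metres
    direction: Vec3   # unit vector along the pointing ray

@dataclass
class Panel:
    center: Vec3
    normal: Vec3      # unit normal facing the user
    right: Vec3       # unit in-plane horizontal axis
    up: Vec3          # unit in-plane vertical axis
    half_width: float
    half_height: float

def predict_pose(pose: Pose, velocity: Vec3, latency_s: float) -> Pose:
    # Hypothetical linear prediction: shift the ray origin by velocity * latency.
    # Orientation is left unchanged to keep the sketch short.
    return Pose(add(pose.origin, scale(velocity, latency_s)), pose.direction)

def raycast_panel(pose: Pose, panel: Panel) -> Optional[Tuple[float, float]]:
    """Return panel-local (u, v) hit coordinates in metres, or None on a miss."""
    denom = dot(pose.direction, panel.normal)
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the panel
    t = dot(sub(panel.center, pose.origin), panel.normal) / denom
    if t < 0:
        return None  # panel is behind the pointer
    hit = add(pose.origin, scale(pose.direction, t))
    local = sub(hit, panel.center)
    u, v = dot(local, panel.right), dot(local, panel.up)
    if abs(u) > panel.half_width or abs(v) > panel.half_height:
        return None  # ray passes beside the panel
    return (u, v)

# A 1.0 m x 0.6 m panel two metres in front of the user, facing them.
panel = Panel((0.0, 0.0, -2.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 0.5, 0.3)
hand = Pose((0.0, 0.0, 0.0), (0.0, 0.0, -1.0))

print(raycast_panel(hand, panel))  # (0.0, 0.0)
predicted = predict_pose(hand, velocity=(0.5, 0.0, 0.0), latency_s=0.02)
print(raycast_panel(predicted, panel))
```

Because the ray comes from a hand or controller rather than the eyes, the same arm pose yields the same hit point every time, which is the repeatability the paragraph above attributes to raycasting.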

What Are the Core Problems with Gaze-Based Selection in XR?

Gaze-based selection causes fatigue, eye strain, and accidental triggers because of natural eye movement patterns such as rapid saccades. Human eyes are not designed to act as cursors; using them for UI navigation produces jittery input, poor UX flow, and cognitive overload.

Foveated rendering prioritizes visual clarity at the center of gaze, but it does not by itself provide stable interaction points. Users frequently shift their gaze involuntarily, which causes unintended UI focus changes and breaks task continuity. Eye-tracking calibration errors further reduce interface reliability, particularly during rapid movement or under variable lighting.
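To make the focus-flicker problem concrete, the sketch below compares raw gaze hit-testing with a simple dwell filter that moves focus only after several consecutive samples agree on a new target. The one-dimensional layout, the sample values, and the `DwellFilter` class are all hypothetical, and dwell filtering is one common mitigation, not necessarily what Apple, Samsung, or Meta use.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical layout: two adjacent buttons on a single horizontal axis.
# Each entry is (name, x_min, x_max); a gaze sample x hits a button when x_min <= x < x_max.
TARGETS: List[Tuple[str, float, float]] = [("A", -0.10, 0.0), ("B", 0.0, 0.10)]

def hit_test(x: float) -> Optional[str]:
    for name, x_min, x_max in TARGETS:
        if x_min <= x < x_max:
            return name
    return None

class DwellFilter:
    """Moves focus only after `dwell_samples` consecutive samples agree on a new target."""

    def __init__(self, dwell_samples: int = 3) -> None:
        self.dwell_samples = dwell_samples
        self.focused: Optional[str] = None
        self.candidate: Optional[str] = None
        self.count = 0

    def update(self, x: float) -> Optional[str]:
        target = hit_test(x)
        if target == self.focused:
            # Gaze is back on the current target: discard any pending switch.
            self.candidate, self.count = None, 0
        elif target == self.candidate:
            self.count += 1
        else:
            self.candidate, self.count = target, 1
        if self.count >= self.dwell_samples:
            self.focused = self.candidate
            self.candidate, self.count = None, 0
        return self.focused

def count_focus_changes(sequence: Iterable[Optional[str]]) -> int:
    changes, previous = 0, None
    for value in sequence:
        if value != previous:
            changes += 1
            previous = value
    return changes

# Hypothetical samples: the user fixates near the edge of button B, and small
# involuntary eye movements push some samples across the boundary onto A.
samples = [0.02, -0.01, 0.03, -0.02, 0.01, 0.04, -0.01, 0.02, 0.03, 0.01]

raw_focus = [hit_test(x) for x in samples]
dwell = DwellFilter(dwell_samples=3)
filtered_focus = [dwell.update(x) for x in samples]

print(count_focus_changes(raw_focus))       # 7
print(count_focus_changes(filtered_focus))  # 1
```

The trade-off is visible in the output: the filter suppresses the flicker, but focus only settles on the final sample, so stability is bought with added selection latency.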

Why Did Apple and Samsung Prioritize Gaze Interaction Anyway?

Both companies focused on reducing visual clutter and dependence on extra hardware. Apple aimed for a minimalist spatial computing interface, using eye direction as the selection pointer and pinch gestures to trigger actions. Samsung followed suit, using similar technology through its partnership with Google on Android XR.
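The look-and-pinch model can be sketched as two input streams: the eye tracker names the element under gaze, and the pinch commits whatever element that is at that instant. The `GazeSample` type, the `resolve_pinch` function, the 100 Hz sample rate, and the button names are hypothetical; they illustrate the model, not either vendor's actual event pipeline.

```python
import bisect
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class GazeSample:
    t_ms: int                # timestamp of the eye-tracker sample
    target: Optional[str]    # UI element under gaze at that instant, if any

def resolve_pinch(gaze: List[GazeSample], pinch_t_ms: int) -> Optional[str]:
    """Commit to the target of the latest gaze sample at or before the pinch.

    Assumes `gaze` is sorted by timestamp.
    """
    times = [s.t_ms for s in gaze]
    i = bisect.bisect_right(times, pinch_t_ms) - 1
    return gaze[i].target if i >= 0 else None

# Hypothetical 100 Hz gaze stream: the user is looking at "Save", and a single
# involuntary eye movement lands on the neighbouring "Delete" button at 50 ms.
gaze = [GazeSample(t, "Save") for t in range(0, 50, 10)]
gaze += [GazeSample(50, "Delete"), GazeSample(60, "Save")]

print(resolve_pinch(gaze, 45))  # Save
print(resolve_pinch(gaze, 55))  # Delete
```

Nothing in the pinch itself says which element the user meant, so a single badly timed involuntary glance is enough to commit the wrong action.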

Their goal was to present “invisible computing” experiences. By removing traditional controllers, they hoped to increase immersion and reduce onboarding time. However, this approach ignored the long-term ergonomic and UX costs of relying on unstable visual anchoring. Meta’s past research showed that visual minimalism cannot compensate for interactive instability.

What Are the Future Alternatives to Improve XR UI Stability?

XR interface evolution is likely to shift toward multimodal interaction systems: combining hand-tracking, spatial gestures, voice commands, and optional controller input. These methods provide redundancy, adaptability, and user choice.

Context-aware systems that use AI to predict intent from the environment and task could reduce the need for exact gesture or gaze alignment. Neural input models, such as the wrist-based neural interface work Meta acquired with CTRL-labs, show promise for bypassing conventional hand-eye coordination altogether.

Interface reliability, not minimalism, defines long-term XR adoption. Companies that prioritize adaptable, fatigue-free input methods will win user retention in the spatial computing race.