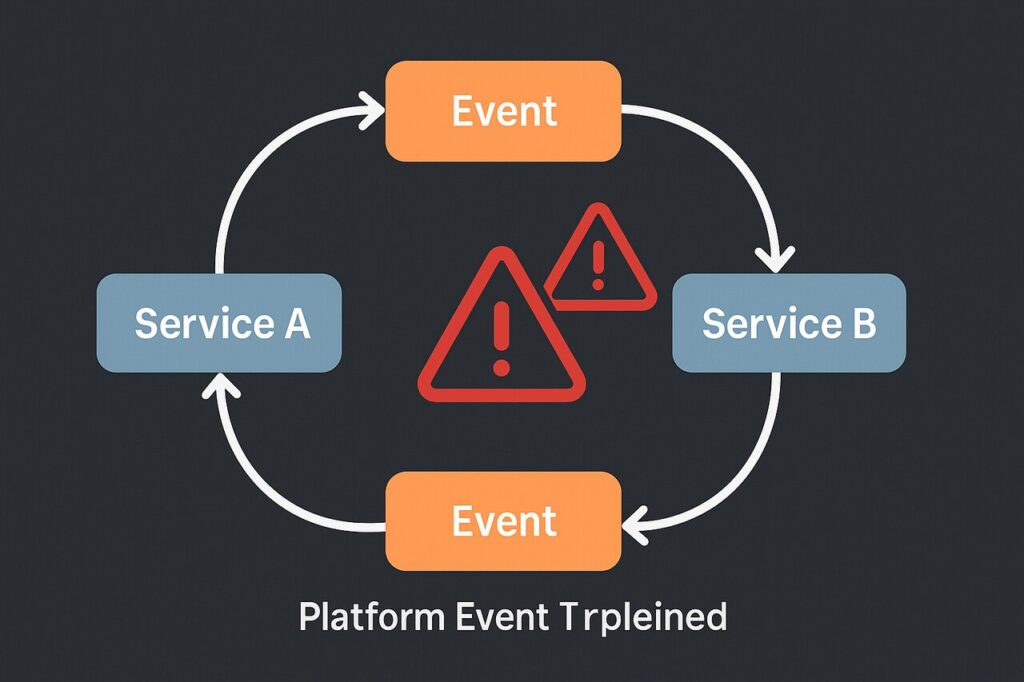

Understanding how failures emerge in event-driven workflows is essential for designing resilient, scalable software. A platform event trap is one of the most subtle and damaging hazards in asynchronous architectures. It occurs when event publishers, subscribers, or retry mechanisms interact in a way that causes recursion, duplication, or unbounded processing. This article breaks down how these traps form, how to detect them, and how to design event systems that prevent infinite loops and maintain data integrity.

Before diving into the mechanics, the table below summarizes key attributes of the concept.

Identify How a Platform Event Trap Forms

Detecting the precursor conditions behind a platform event trap is the first step toward controlling it. The most immediate requirement is identifying any workflow in which a consumer generates new events connected to the same subscription path, creating the potential for unintended feedback. When event logic lacks clear boundaries, even simple updates may re-emit events that retrigger their own handlers. This behavior starts subtly, often unnoticed during development, before escalating in production when workloads and concurrency greatly increase.

Recognizing the elements involved requires analyzing every event publisher and consumer to determine what state changes trigger events, which handlers subscribe to these events, and how downstream processing interacts with upstream triggers. The most common contributors include circular publications, unguarded field updates, retry loops that reprocess the same input, and overly broad subscription filters. Each pattern introduces a weak point that can accelerate feedback, leading to runaway event emission.

Most systems display early warning signs long before a full collapse occurs. Queue length spikes, unexpected increases in handler execution frequency, or duplicate data updates often indicate that one or more events are being re-introduced into the pipeline unintentionally. Identifying their origin involves examining handler logs, trace IDs, replay positions, and recent configuration changes. Once the relationships between handlers and publishers are mapped, the root cause usually becomes evident and remediation can begin.
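As a rough illustration of tracing duplicates back to their origin, the sketch below counts how often each handler ran for a given trace ID and flags the combinations that exceed a limit. The record fields (`trace_id`, `handler`) and the default limit of three runs are assumptions made for this example, not the schema of any particular platform.

```python
from collections import Counter


def suspicious_traces(log_records, max_runs_per_handler=3):
    """Return (trace_id, handler) pairs that ran more often than the limit."""
    runs = Counter((rec["trace_id"], rec["handler"]) for rec in log_records)
    return sorted(key for key, count in runs.items() if count > max_runs_per_handler)


# Hypothetical log sample: one trace ran the same handler five times.
records = (
    [{"trace_id": "t-1", "handler": "sync_account"}] * 5
    + [{"trace_id": "t-2", "handler": "sync_account"}]
)
print(suspicious_traces(records))
```

A trace that keeps re-running the same handler is a good starting point for reading the surrounding logs and configuration history.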

Analyze the Causes Behind Unintended Recursion and Duplication

Pinpointing the drivers behind event traps requires examining how handlers interact with the event bus. Many traps emerge when a handler publishes the same event type it consumes, or when multiple handlers create circular dependencies by emitting related events in response to one another. These patterns flourish when development teams assume events will only fire under predictable conditions, overlooking edge cases that create hidden feedback loops.

Causal factors also include missing safeguards such as idempotency keys, duplicate detection, conditional logic around state changes, and validation of triggering criteria. Without these checks, events may reproduce themselves after retries, concurrent updates, or compensation workflows. This is especially common when retry policies are aggressive, causing the same event to execute multiple times before being offloaded to a dead-letter queue, if one exists.

Concurrency amplifies the risk. High-volume systems often process events simultaneously, meaning two subscribers may operate on a shared record and emit parallel events that interact in unintended ways. Database commit behavior adds another dimension. If an event is published before the state change commits, a downstream handler may read the old state and conclude that no work was performed, and if the transaction later rolls back, the event describes a change that never happened. Timing discrepancies of this kind can also cause replays. Understanding these nuances helps distinguish routine processing from a full event trap.

Assess Symptoms and Risks Before System Degradation Occurs

The clearest symptom of a platform event trap is rapid, repeated event publication without corresponding business activity. These loops inflate event counts and trigger redundant handler runs that degrade performance. Logs often reveal identical messages appearing multiple times, sometimes with increasing latency as the queue backs up. Systems relying on synchronous bridges may experience timeouts or throttling whenever recursive flows intensify.

Common risks include data corruption, billing overages on event platforms, and loss of state consistency. A trap may also cause partial writes, where certain handlers complete and others fail, leaving the system in a contradictory state. Performance degradation is another major outcome, as event handlers compete for processing time and databases receive excessive traffic. Under extreme conditions, queue exhaustion prevents legitimate events from being processed, interrupting critical business operations.

Systems with weak visibility suffer the most because traps may operate silently until they reach scale. When execution logs lack trace identifiers, it becomes difficult to associate related events. Missing metrics around handler frequency, queue depth, or replay counts make detection even harder. By the time alerts fire, the issue may have propagated across multiple services, complicating resolution. Early detection is essential to limiting impact.

Implement Practices That Prevent Event Recursion and Duplication

Establishing strong prevention strategies ensures event flows remain predictable and resilient. The foundational measure is ensuring that handlers avoid emitting events unnecessarily, especially events tied to the same subscription path. Safeguards such as conditional triggers, version checks, and controlled emission rules prevent handlers from blindly publishing follow-up events. Developers must treat every event publish as a potentially cascading action.
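A conditional trigger with a version check might look like the following minimal sketch. The record layout, the `StatusChanged` event name, and the `publish` callback are hypothetical and stand in for whatever the real platform provides.

```python
def update_status(record, new_status, publish):
    """Apply a status change and publish only if the state really changed."""
    if record.get("status") == new_status:
        # No-op update: publishing here is what typically feeds a loop.
        return False
    record["status"] = new_status
    record["version"] = record.get("version", 0) + 1
    publish({
        "type": "StatusChanged",
        "id": record["id"],
        "status": new_status,
        "version": record["version"],
    })
    return True


published = []
order = {"id": "o-1", "status": "new"}
update_status(order, "paid", published.append)
update_status(order, "paid", published.append)  # repeated update, no event
```

Because the second call changes nothing, it emits nothing, so a handler that echoes the same update back cannot keep the chain going.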

Idempotency is a critical component of prevention. When systems enforce idempotent processing, duplicate events no longer disrupt downstream logic. Techniques include using idempotency keys, storing processed event identifiers, or designing handlers so that repeated calls produce the same end state. These techniques ensure that retries, replays, or concurrent deliveries cannot cause runaway side effects.
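The idea of storing processed event identifiers can be sketched as below. This is an illustration only: the `event_id` field is an assumed name, and the in-memory set stands in for the durable store a real system would need so that the record survives restarts.

```python
class IdempotentHandler:
    """Wrap a handler so each event ID takes effect at most once."""

    def __init__(self, apply):
        self._apply = apply
        self._seen = set()  # assumption: in-memory only, for illustration

    def handle(self, event):
        key = event["event_id"]
        if key in self._seen:
            return False  # duplicate delivery: skip the side effect
        self._apply(event)
        # Record the key only after success so a failed attempt can be retried.
        self._seen.add(key)
        return True


balance = {"total": 0}


def credit(event):
    balance["total"] += event["amount"]


handler = IdempotentHandler(credit)
evt = {"event_id": "e-42", "amount": 10}
results = [handler.handle(evt) for _ in range(3)]
```

Delivering the same event three times credits the balance once, which is the property that makes retries and replays safe.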

Finally, prevention requires rethinking subscription design. Avoid broad filtering criteria that capture too many events, and ensure subscriber responsibilities are clearly bounded. Using message routing, partitioning, and topic separation limits the chance of accidental recursion. Comprehensive testing, including replay simulations and load testing, helps validate that event flows behave correctly under real-world conditions.

Apply Design Patterns That Increase Safety and Resilience

Several well-established design patterns reduce trap risk. The single-responsibility handler pattern ensures each handler performs one well-defined action and does not emit unrelated events. The state transition pattern ensures events represent meaningful and discrete changes, preventing redundant publications during partial updates. Temporal filters help suppress repetitive events triggered within short windows.
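A temporal filter can be approximated with a small class that remembers when each key last emitted. The key shape, the five-second window, and the injectable clock are assumptions chosen to keep the sketch testable.

```python
import time


class TemporalFilter:
    """Suppress repeat emissions for the same key within a time window."""

    def __init__(self, window_seconds, clock=time.monotonic):
        self._window = window_seconds
        self._clock = clock
        self._last_emitted = {}

    def allow(self, key):
        now = self._clock()
        last = self._last_emitted.get(key)
        if last is not None and now - last < self._window:
            return False  # still inside the window: suppress
        self._last_emitted[key] = now
        return True


# Drive the filter with a fake clock so the behavior is deterministic.
fake_now = [0.0]
event_filter = TemporalFilter(5.0, clock=lambda: fake_now[0])
decisions = []
for t in (0.0, 1.0, 4.9, 5.0, 6.0):
    fake_now[0] = t
    decisions.append(event_filter.allow(("order", "o-1")))
```

The window restarts only when an event is allowed through, so a steady burst is thinned to one emission per window rather than being blocked indefinitely.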

Another effective practice is using a central event registry. This registry documents every event type, publisher, subscriber, and trigger rule. Maintaining this registry avoids overlapping responsibilities that can unknowingly create circular dependencies. Similarly, asynchronous workflow orchestration frameworks help coordinate events in a predictable sequence, preventing cycles across microservices.
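If the registry is kept in a machine-readable form, circular dependencies can be checked automatically. The sketch below assumes a hypothetical registry layout in which each handler lists the event types it consumes and publishes, then runs a depth-first search over the resulting event graph.

```python
def find_event_cycle(registry):
    """Return one cycle of event types as a list, or None if none exists."""
    graph = {}
    for entry in registry.values():
        for consumed in entry["consumes"]:
            graph.setdefault(consumed, set()).update(entry["publishes"])

    visiting, done = [], set()

    def visit(node):
        if node in done:
            return None
        if node in visiting:
            # Reached a node already on the current path: report the loop.
            return visiting[visiting.index(node):] + [node]
        visiting.append(node)
        for nxt in sorted(graph.get(node, ())):
            cycle = visit(nxt)
            if cycle:
                return cycle
        visiting.pop()
        done.add(node)
        return None

    for start in sorted(graph):
        cycle = visit(start)
        if cycle:
            return cycle
    return None


# Hypothetical registry: two handlers that feed each other.
registry = {
    "on_order_updated": {"consumes": ["OrderUpdated"], "publishes": ["InvoiceChanged"]},
    "on_invoice_changed": {"consumes": ["InvoiceChanged"], "publishes": ["OrderUpdated"]},
    "on_shipment": {"consumes": ["ShipmentCreated"], "publishes": []},
}
print(find_event_cycle(registry))
```

A check like this could run in continuous integration, so a new subscription that closes a loop is caught before deployment. It only sees static declarations, so conditional publishing still needs review by hand.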

Dead-letter queue strategies must also be incorporated. When handlers fail repeatedly, events are moved aside instead of being retried indefinitely. This protects the pipeline from accumulating problematic events. Coupled with smart retry policies, such as exponential backoff or conditional retries, the system remains stable even under stress.
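The combination of bounded retries, exponential backoff, and a dead-letter queue can be sketched as follows. The attempt limit, base delay, and the list used as a dead-letter queue are illustrative assumptions. Managed platforms usually expose these as configuration rather than code.

```python
import time


def process_with_retries(event, handler, dead_letters, max_attempts=4,
                         base_delay=0.5, sleep=time.sleep):
    """Run handler with exponential backoff; park the event after the last failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            handler(event)
            return True
        except Exception as exc:
            if attempt == max_attempts:
                # Give up: move the event aside instead of retrying forever.
                dead_letters.append(
                    {"event": event, "error": repr(exc), "attempts": attempt}
                )
                return False
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
    return False  # only reached if max_attempts < 1


def always_fails(event):
    raise ValueError("downstream unavailable")


delays, dead_letters = [], []
ok = process_with_retries({"event_id": "e-1"}, always_fails, dead_letters,
                          sleep=delays.append)
```

Passing `sleep` as a parameter keeps the example fast to run. In this run it records the delays instead of waiting.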

Strengthen Monitoring to Detect Traps in Real Time

Effective monitoring identifies traps before they cause system failure. Monitoring must track queue depth, handler execution rate, execution latency, error counts, and event throughput. When readings exceed thresholds, automated alerts should notify engineers. Tools like trace IDs, correlation IDs, or distributed tracing platforms make it easier to follow an event’s full journey through the system.
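A threshold on handler execution rate can be expressed as a sliding-window counter, as in this minimal sketch. The class name, the threshold of three runs, and the ten-second window are assumptions. In practice the same rule would normally live in a metrics or alerting system.

```python
from collections import deque


class HandlerRateMonitor:
    """Flag when a handler runs more than max_runs times within a window."""

    def __init__(self, max_runs, window_seconds):
        self._max_runs = max_runs
        self._window = window_seconds
        self._timestamps = deque()

    def record(self, timestamp):
        """Record one run; return True if the rate threshold is exceeded."""
        self._timestamps.append(timestamp)
        # Drop runs that have aged out of the window.
        while self._timestamps and timestamp - self._timestamps[0] > self._window:
            self._timestamps.popleft()
        return len(self._timestamps) > self._max_runs


monitor = HandlerRateMonitor(max_runs=3, window_seconds=10)
alerts = [monitor.record(t) for t in (0, 1, 2, 3, 20)]
```

The fourth run inside ten seconds trips the alert, while the run at second 20 does not because the earlier ones have expired.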

Logging must be structured, consistent, and searchable. Handlers should log event IDs, states before and after execution, and any conditional logic that governs publication. Without these logs, diagnosing recursive behavior becomes guesswork. Metrics around dead-letter queue utilization and replay counts also serve as early signals that a trap may be forming.
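One way to make handler logs structured and searchable is to emit a JSON object per run. The field names below are assumptions chosen for illustration. The point is that event ID, trace ID, state before and after, and published events all appear in one record.

```python
import json
import logging

logger = logging.getLogger("event_handlers")


def log_handler_run(event_id, trace_id, handler, before, after, published):
    """Emit one JSON log line describing a handler run and return it."""
    entry = {
        "event_id": event_id,
        "trace_id": trace_id,
        "handler": handler,
        "state_before": before,
        "state_after": after,
        "published": published,  # event types emitted by this run
    }
    line = json.dumps(entry, sort_keys=True)
    logger.info(line)
    return line


line = log_handler_run(
    "e-7", "t-1", "sync_account",
    {"status": "new"}, {"status": "paid"}, ["StatusChanged"],
)
```

With records like this, a query for one trace ID shows which handlers ran, what they changed, and what they published in response.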

Visibility should extend into system dashboards where real-time behavior can be observed. Graphs showing sudden spikes in event volume or handler execution frequency instantly reveal anomalies. With these insights, teams can intervene quickly by pausing subscriptions, limiting throughput, or redirecting workloads.

Refactor Event Flows in Systems Already Showing Trap Behavior

Remediating an active trap requires careful intervention that avoids additional disruptions. The first step is isolating the malfunctioning handler or event pair. Temporarily disabling problematic subscriptions prevents further recursion while analysis proceeds. Once stabilized, developers can inspect the event payloads, handler logic, and recent configuration changes to identify the specific feedback mechanism.

Refactoring often involves restructuring event logic so that state changes are validated before publication. Another technique is separating high-risk events into their own topics or channels, thereby preventing them from intersecting with handlers that previously caused recursion. Updating retry policies or adjusting filtering criteria further reduces the chance of future traps.

To avoid downtime, gradual rollouts and canary releases help test new event flows safely. These approaches validate whether the modifications resolve the trap without affecting unrelated functionality. Once verified, the updated configuration can be promoted into broader release environments.

Evaluate Platform Features That Influence Trap Behavior

While platform event traps can occur in any event-driven system, different technologies introduce unique conditions. Salesforce Platform Events are susceptible to trap formation when Apex triggers recursively publish events in response to updates. The tight coupling between object changes and event triggers in Salesforce demands precise control of handler logic. Without explicit recursion guards, even minor field updates can multiply into runaway chains.

Kafka presents a different profile. Because Kafka retains event history and allows replay, handlers may consume old events if offsets reset unexpectedly. If consumers republish processed messages without idempotency keys, duplication becomes likely. Concurrency is a major factor here, as multiple consumers may process partitions independently, increasing the complexity of ensuring safe event publication.

Amazon EventBridge reduces some risks through rule-based filtering and integration boundaries. However, broad event patterns or cross-account routing may still form loops when downstream systems echo events back into the bus. Understanding how each platform handles retries, ordering, and dead-letter queues is essential to determining where traps may arise and how aggressively they must be mitigated.

Use Intentional Recursion Safely in Controlled Scenarios

There are rare situations where intentionally re-emitting an event is appropriate. Audit workflows commonly rely on recurring messages that capture each state transition. Compensating transactions in distributed systems may also use recursive events to unwind operations during failure recovery. Similarly, replay mechanisms in event-sourced architectures intentionally reprocess historical events to rebuild system state.

These patterns are permissible only when strict safeguards are in place. Every recursive flow must enforce idempotency, monotonic state transitions, and bounded iteration. Documentation is equally important so future developers understand the logic and do not mistake controlled recursion for runaway behavior. Monitoring must also include targeted alerts for these flows to detect unexpected changes in rate or volume.
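Bounded iteration can be enforced by carrying a hop counter in the event itself. In this sketch the `hops` field, the limit of five, and the in-process queue are assumptions used to simulate a handler that always re-emits what it receives.

```python
from collections import deque

MAX_HOPS = 5  # assumed limit for this illustration


def republish(event, publish, max_hops=MAX_HOPS):
    """Re-emit an event with an incremented hop count, up to max_hops."""
    hops = event.get("hops", 0) + 1
    if hops > max_hops:
        return False  # bound reached: stop the chain here
    publish({**event, "hops": hops})
    return True


# Simulate a handler that re-emits every event it processes.
queue = deque([{"type": "Recalculate", "hops": 0}])
processed = 0
while queue:
    event = queue.popleft()
    processed += 1
    republish(event, queue.append)
```

The loop ends after the original event and five re-emissions have been processed, so even a handler that always republishes cannot run without bound.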

Balancing flexibility with safety requires determining which workflows legitimately need recursion and which should avoid it entirely. By evaluating business requirements, system load characteristics, and platform capabilities, architects can design event systems that support advanced functionality without becoming fragile or unpredictable.

Comparison Table: Trap Risk Across Common Event Platforms

| Platform | Primary Trap Risk | Root Causes | Risk Level |
|---|---|---|---|
| Salesforce Platform Events | Trigger recursion and duplicate updates | Apex triggers emitting new events on update | High |
| Apache Kafka | Replay-driven duplication | Offset resets, reprocessing, redundant publishers | Medium-High |
| Amazon EventBridge | Cross-rule loops | Broad routing rules, echo events | Medium |
| Message queues (RabbitMQ, etc.) | Retry storms | Poor retry policies, lack of idempotency | Medium |

Conclusion

A platform event trap arises when event flows behave in ways that recursively trigger themselves, duplicate work, or overwhelm the event bus. These traps degrade performance, corrupt data, and harm system reliability. By designing event handlers with clear boundaries, enforcing idempotency, strengthening subscription logic, and leveraging monitoring tools, teams can dramatically reduce the likelihood of such failures. When traps do form, structured remediation and careful refactoring restore stability without major downtime. Understanding platform-specific risk factors further equips developers to build robust, predictable event-driven architectures.

FAQs

Normal processing delivers each event once and triggers predictable behavior. A trap occurs when events reproduce, loop, or multiply unintentionally, causing unbounded execution.

Yes. If a handler publishes an event that triggers the same handler again, the system can enter a self-reinforcing cycle.

Idempotency ensures duplicate events do not alter the system more than once, preventing duplicate updates or recursive side effects.

They prevent retry storms by isolating repeatedly failing events instead of letting them re-enter the processing cycle.

Yes. When events arrive out of order, handlers may misinterpret state and publish unnecessary follow-up events that trigger loops.

Watch for surging queue depth, repeated identical events, unusually high handler execution rates, or unexplained spikes in processing load.